On August 25, Alibaba Cloud launched an open-source Large Vision Language Model (LVLM) named Qwen-VL. The LVLM is based on Qwen-7B, Alibaba Cloud’s 7-billion-parameter foundational language model. In addition to capabilities such as image-text recognition, description, and question answering, Qwen-VL introduces new features including visual location recognition and image-text comprehension, the company said in a statement. These functions enable the model to identify locations in pictures and to give users guidance based on information extracted from images, the firm added. The model can be applied in scenarios including image- and document-based question answering, image caption generation, and fine-grained visual recognition. Both Qwen-VL and its visual AI assistant, Qwen-VL-Chat, are currently available for free and for commercial use on ModelScope, Alibaba’s “Model as a Service” platform. [Alibaba Cloud statement, in Chinese]

Related Articles

2025-06-27 09:00

2286 views

Then and Now: Six Generations of $200 Mainstream Radeon GPUs Compared

A few weeks ago we published our latest feature in the 'Then and Now' series, testing and comparing

Read More

2025-06-27 07:46

2111 views

Wild Desire by Pedro Lemebel

Wild DesireBy Pedro LemebelMay 21, 2024First PersonAbstract 2from Awashby Will Steacy, a portfolio p

Read More

2025-06-27 07:39

562 views

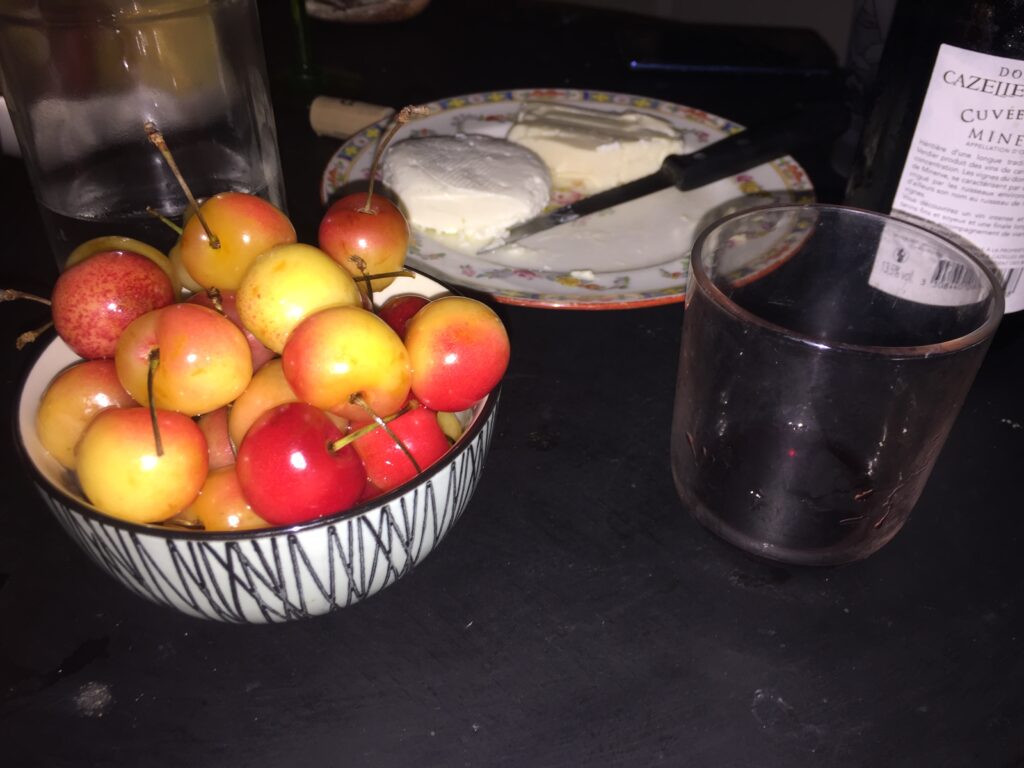

Emma's Last Night by Jacqueline Feldman

Emma’s Last NightBy Jacqueline FeldmanMay 2, 2024Dinner PartiesPhotograph by Jacqueline Feldma

Read More